Experiments is a relatively new tool in Google Analytics that allows you to run A/B tests on your website. Read on to get started.

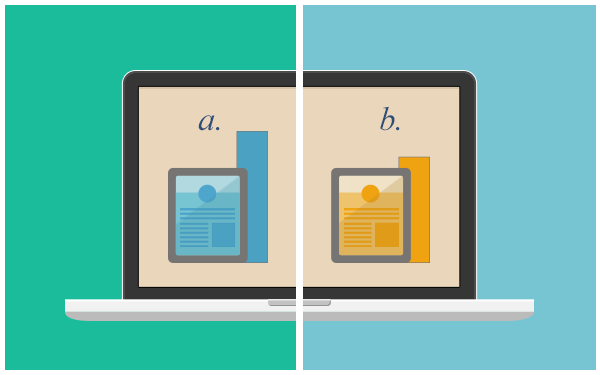

A/B testing uses two or more variations of the same webpage to test which one generates the best results or conversions. Content Experiments lets you test which version of a landing page leads to the greatest improvement in goal completions or in a metric value. With this tool, you can test up to five variations of a page.
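To make the idea concrete, here is a minimal sketch of how variations could be compared by conversion rate. The visit and conversion counts are made-up numbers, and the function names are only illustrative; Content Experiments does this comparison for you and also takes statistical confidence into account, which this sketch does not.

```python
# Hypothetical visit and conversion counts per page variation (made-up numbers).
results = {
    "original": {"visits": 1000, "conversions": 20},
    "variation_1": {"visits": 1000, "conversions": 26},
    "variation_2": {"visits": 1000, "conversions": 18},
}


def conversion_rate(stats):
    """Share of visits that completed the goal."""
    if stats["visits"] == 0:
        return 0.0
    return stats["conversions"] / stats["visits"]


def best_variation(results):
    """Name of the variation with the highest raw conversion rate."""
    return max(results, key=lambda name: conversion_rate(results[name]))


for name, stats in results.items():
    print(f"{name}: {conversion_rate(stats):.2%}")
print("Best so far:", best_variation(results))
```

A raw comparison like this only tells you who is ahead at the moment; with small samples the leader can easily change, which is why a test needs enough traffic before a winner is declared.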

Some of the key features Content Experiments brings to the table are:

- Only the original page needs the experiment script to run tests; the standard Google Analytics tracking code is used to measure goals and variations

- Any advanced segment can be used when testing inside Google Analytics

- Improved analytics for your test pages, so that you can make better-informed decisions

- A two-week minimum test period to reach critical mass: results are shown only after the test has been running for 2 weeks

- Tests are turned off automatically after 3 months, to avoid running tests that have no chance of producing a winner

- The “Dynamic Traffic Allocation” functionality: traffic is shifted away from low-performing variations and directed towards higher-performing ones. This function was introduced to prevent poorly performing variations from doing extensive damage.
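The post does not describe how the traffic shifting is calculated, so the following is only an illustrative sketch of one common way to do this kind of allocation (Thompson sampling), not Google's implementation. The function name `allocate_traffic`, the counts and the parameters are all assumptions made for the example.

```python
import random


def allocate_traffic(stats, draws=10000, seed=0):
    """Estimate the share of traffic each variation should receive.

    `stats` maps a variation name to (conversions, visits).
    On every draw, a plausible conversion rate is sampled for each variation
    from a Beta distribution; a variation's share is the fraction of draws
    in which its sampled rate was the highest.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in stats}
    for _ in range(draws):
        sampled = {
            name: rng.betavariate(1 + conversions, 1 + visits - conversions)
            for name, (conversions, visits) in stats.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Hypothetical counts: the variation converts better than the original.
shares = allocate_traffic({"original": (20, 1000), "variation_1": (35, 1000)})
print(shares)
```

With counts like these, most of the traffic goes to the better-performing variation, while the weaker one still receives a small share in case its early results were just bad luck.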

Let’s go through the process of setting up and using Content Experiments to do A/B testing inside Google Analytics:

Step 1: Create a new experiment

Inside Google Analytics (if you don’t have an account, you need to create one), go to the Content reports and choose Experiments in the sidebar. You will be taken to a page that lists all your experiments. Click the “Create Experiments” button above the table and you will reach the following page:

At this stage, you can add up to 5 variations of the test page. To help you check that the links are correct, thumbnails of these URLs are shown at the side of the page. Once this is done, you can click Next.

Step 2: Set up the experiment options

There are two options you need to set up in this step:

The first option is about setting up goals. There is a dropdown menu where you can choose the type of goal you want. If you can’t find your type of goal there, you can create a new one. You have to set up a goal; otherwise, how will you know which version of your website is the best?

Secondly, you need to select what share of your visitors will be included in the experiment: you can choose between 100%, 75%, 50%, 25%, 10%, 5% or 1%. Sending 100% of your traffic to the experiment is not really recommended, especially if the change is drastic, so as not to damage your conversions.
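To illustrate what including only a percentage of visitors means, here is a minimal sketch that assigns visitors to the experiment by hashing an ID. This is an assumption about how such sampling can be done in general, not a description of what Google Analytics does internally; `in_experiment` and the visitor IDs are hypothetical.

```python
import hashlib


def in_experiment(visitor_id, traffic_percent):
    """Decide whether a visitor is included in the experiment.

    Hashing the visitor ID yields a stable bucket in [0, 100), so the same
    visitor always gets the same decision on every visit.
    """
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    bucket = (int(digest, 16) % 10000) / 100.0  # 0.00 .. 99.99
    return bucket < traffic_percent


# Roughly a quarter of these hypothetical visitors end up in the experiment.
included = sum(in_experiment(f"visitor-{i}", 25) for i in range(10000))
print(included / 10000)
```

Visitors who are not included simply keep seeing the original page, which is why a lower percentage limits the damage a badly performing variation can do.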

Click Next in order to go to the next step.

Step 3: Add and check the code for the experiment

As mentioned at the beginning of the post, you only need one piece of code on your test pages. Now is the moment to choose whether you will implement the code yourself or send it to your webmaster to implement:

Once the code is implemented, you can run a test to see if the experiment tracking is working. If the code is not implemented or not working properly, you will get the following message:

You also have the option to skip validation and continue, but it is still recommended that you check what’s wrong and get the validation to pass. This way you will be sure the experiment is 100% working.

Step 4: Review your experiment

This is the final page – where you can review all the options you have selected for your experiment. If all is good, you can either save and run the experiment later or run the experiment right now.

Results of the Experiment

This is what the page of a running experiment looks like:

You have the options of Advanced Segmenting, Stopping the Experiment, Re-validation, Disable Variation and Conversion Rate. The Conversion Rate tab gives you the possibility to get the results of your test on several metrics.

A page with a winning variation looks similar to this one:

You can see the Road Runner (purple line) as the winning test page, with a lift of 28.5% in conversions compared to the original.
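Lift is the relative improvement of a variation over the original. The underlying conversion rates are not given in this post, so the rates in the sketch below are hypothetical values chosen to reproduce a 28.5% lift.

```python
def lift(original_rate, variation_rate):
    """Relative improvement of a variation over the original."""
    if original_rate <= 0:
        raise ValueError("original_rate must be positive")
    return (variation_rate - original_rate) / original_rate


# Hypothetical rates: 2.00% for the original, 2.57% for the variation.
print(f"Lift: {lift(0.0200, 0.0257):.1%}")
```

Note that lift is relative: going from a 2.00% to a 2.57% conversion rate is a 28.5% lift, even though the absolute difference is only 0.57 percentage points.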

Summary

The Content Experiments tool inside Google Analytics is a great tool, especially for those who don’t test much. Still, the tool has a lot of room for improvement, with features missing for those who do quite a lot of testing. Some of the things to improve are:

- MVT (multivariate) testing

- E-commerce transactions as goals

- Remove limit of 5 variations per test

- Remove the current limit of 12 tests per profile

- Option to use the standard tracking code for everything
